Fall Detection App: Putting It Together

Building the fall detection app for Android

When the project started, a whopping four years before the time of writing, I had little experience in Android app development. I had built a couple of small apps for tracking sports activities, but they never reached the maturity needed for a public release; they were more like first dives into Android development. So let's have a look at how I did it back then.

Disclaimer: The app does not represent current best practices for Android development, but it nicely lays out what needs to be done on Android. Today, I would definitely use good practices such as dependency injection, MVVM, testing and others. In retrospect, every programmer would do things differently after a certain period of time. That is why I will describe the basic ideas behind the implementation rather than the code itself. You can have a look at the code in the repository below.

The repository of the app is here: Repository

Assumptions

- The app needs to be easy to use because the elderly are the target group

- All activity requires assessment, so the app needs to run in the background all the time

- The app needs to differentiate between an active and an inactive state

- The app is active only when the phone is in a trouser or jacket pocket, to save battery and avoid false positives

- If a fall happens, the app should start an alarm to attract the attention of people nearby and send an SMS message on its own

Design of the app

If the app is supposed to be used by the elderly, it needs to be dead simple. That is why the app consists of a single button. A long press turns the algorithm on or off, and the button's colour also signals whether the app is active.

The notification indicates that the algorithm is running and shows the current detection type: fall detection, car collision detection, or inactive. The algorithm can also be stopped via a shortcut button in the notification.

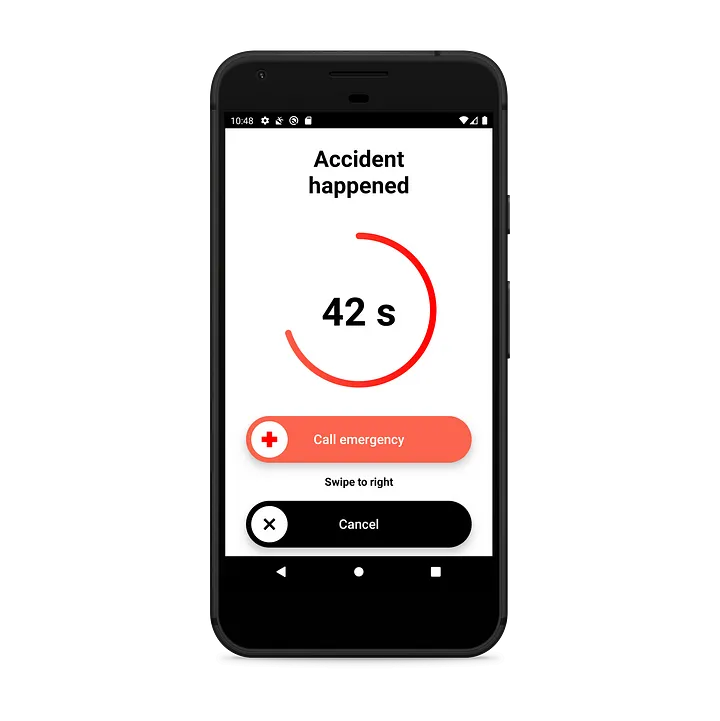

If an accident happens, the person needs to attract the attention of people nearby. First, when the app detects an accident, the phone needs to take over the screen. The injured person should not be held up by the lock screen, so the app needs to bypass the lock and show the alarm screen. This is similar to how your dialer app handles phone calls: even though the phone is locked, you can still pick up a call.

In the event of an accident, there may be people around, for example in another room or a couple of metres away. During the alarm, a high-pitched sound is played on repeat. After a couple of seconds, the sound changes to catch the listener's attention again and make sure that people hear the alarm.

Unfortunately, the app cannot avoid false positive alarms. There needs to be a deterministic way to call for help immediately or to cancel the alarm. Simple buttons could be tapped by accident; swipe buttons prevent the user from accidentally triggering the wrong action.

After one minute without a response, the phone sends the SMS automatically. The alarm also makes the phone vibrate and toggles the flashlight on and off.
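The alarm behaviour described above can be modelled as a small state machine. The sketch below is a minimal, hypothetical version in plain Java, not the app's actual code: `AlarmController` and `AlarmState` are illustrative names, the one-minute SMS timeout comes from the text, and the 5-second tone-switch interval is an assumed value for "a couple of seconds". Playing sounds, vibrating, toggling the flashlight and sending the SMS are Android calls that are left out here.

```java
/** Hypothetical alarm states, not taken from the app's code. */
enum AlarmState { RINGING, CANCELLED, HELP_REQUESTED, SMS_SENT }

/** Minimal sketch of the alarm flow: swipe to cancel or confirm, otherwise send the SMS after a timeout. */
final class AlarmController {
    static final long SMS_TIMEOUT_MS = 60_000; // from the post: one minute without a response
    static final long TONE_SWITCH_MS = 5_000;  // assumption: "a couple of seconds"

    private final long startMs;
    private AlarmState state = AlarmState.RINGING;

    AlarmController(long startMs) {
        this.startMs = startMs;
    }

    AlarmState state() {
        return state;
    }

    /** Alternates between two tones every TONE_SWITCH_MS so the sound keeps changing. */
    int toneIndex(long nowMs) {
        return (int) (((nowMs - startMs) / TONE_SWITCH_MS) % 2);
    }

    /** Swipe "cancel": only has an effect while the alarm is still ringing. */
    void onSwipeCancel() {
        if (state == AlarmState.RINGING) {
            state = AlarmState.CANCELLED;
        }
    }

    /** Swipe "call for help": skips the countdown. */
    void onSwipeConfirm() {
        if (state == AlarmState.RINGING) {
            state = AlarmState.HELP_REQUESTED;
        }
    }

    /** Called periodically; switches to SMS_SENT once the timeout elapses without a response. */
    AlarmState tick(long nowMs) {
        if (state == AlarmState.RINGING && nowMs - startMs >= SMS_TIMEOUT_MS) {
            state = AlarmState.SMS_SENT; // in the app, the SMS would be sent at this point
        }
        return state;
    }
}
```

A caller would invoke `tick` periodically, for example once per second, and play the tone returned by `toneIndex`. Because both swipe handlers only act while the alarm is ringing, a late swipe cannot undo an SMS that has already been sent.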

Unfortunately, automating SMS sending on Android is getting harder and harder because of Google Play Store restrictions meant to prevent scams. (Good news for users, bad news for some use cases.)

After clicking the button

In Android, the Activity class handles all UI interactions with the user. However, the app needs to keep running in the background. For this purpose, Android offers foreground services, which are tied to a visible notification. If Android or the user kills the app's Activity, the foreground service keeps running and preserves its state. (A foreground service is a regular Service promoted to the foreground with startForeground(), so whenever I write Service, I mean this foreground service.)

The Activity observes the Service for the state of the algorithm. To avoid binding the Activity directly to the Service, they communicate via intents and broadcast receivers.

The algorithm is started by the Service. It connects to all the required sensors and detectors of the user’s activity.

Battery saving

Saving the battery is one of the most crucial aspects of mobile development because no one wants to use an app that drains the battery. To keep computing power and sensors available after the screen turns off, the app needs a wake lock that keeps the CPU running. The CPU is one of the biggest battery drains in the phone, so it is important to hold the wake lock only for limited periods.

Pocket constraint

The sensors and analysis are active only if the phone is in a pocket. Among the sensors, you can find a proximity sensor, which is usually hidden beside the front camera. It reports the distance from the sensor to an object, up to a maximum range, e.g. 5 cm. In my case, all of the tested phones behaved in a binary way: the reading was either 0 cm or the maximum distance. So if the distance is 0, the algorithm is turned on. If it is not 0, the algorithm is disabled because the phone is outside the pocket.
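The pocket check boils down to a tiny gate. Below is a minimal sketch in plain Java under the binary-reading behaviour described above; `PocketGate` is a hypothetical name, not a class from the app. On Android, the distance would come from `SensorEvent.values[0]` of a `Sensor.TYPE_PROXIMITY` sensor, and the returned flag tells the caller when to register or unregister the accelerometer.

```java
/** Hypothetical gate that turns the algorithm on only while the proximity sensor reads 0 cm. */
final class PocketGate {
    private boolean algorithmOn = false;

    /**
     * Feeds a proximity reading in cm. Returns true if the on/off state changed,
     * so the caller only (un)registers sensors when it actually needs to.
     */
    boolean onProximity(float distanceCm) {
        boolean inPocket = distanceCm == 0f; // binary behaviour observed on the tested phones
        boolean changed = inPocket != algorithmOn;
        algorithmOn = inPocket;
        return changed;
    }

    boolean isAlgorithmOn() {
        return algorithmOn;
    }
}
```

Reporting only state changes avoids re-registering sensors on every proximity event, which matters because the sensor may report the same value repeatedly.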

Activity constraint

Google's Activity Recognition API (part of Google Play services) lets us restrict detection to the periods when the person is walking. The API can detect riding in a car, walking and other activities, so the app can switch the detection type based on the movement. This option is disabled by default. (See: Activity recognition by Google.)
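Switching the detection type can be sketched as a mapping from the recognised activity to one of the three modes shown in the notification. This is a hypothetical plain-Java sketch: the real API reports activities as `DetectedActivity` constants, and `UserActivity` below is a simplified stand-in. The mapping itself (walking or running means fall detection, riding in a vehicle means car collision detection, anything else means inactive) and the choice to keep fall detection on when recognition is disabled are assumptions, not the app's confirmed behaviour.

```java
/** Simplified stand-in for the activity types reported by the Activity Recognition API. */
enum UserActivity { WALKING, RUNNING, IN_VEHICLE, STILL, UNKNOWN }

/** Detection modes shown in the notification. */
enum DetectionMode { FALL, CAR_COLLISION, INACTIVE }

final class DetectionModeSelector {
    /** Assumed mapping; when recognition is off (the default), fall detection stays on. */
    static DetectionMode select(boolean recognitionEnabled, UserActivity activity) {
        if (!recognitionEnabled) {
            return DetectionMode.FALL;
        }
        switch (activity) {
            case WALKING:
            case RUNNING:
                return DetectionMode.FALL;
            case IN_VEHICLE:
                return DetectionMode.CAR_COLLISION;
            default:
                return DetectionMode.INACTIVE;
        }
    }
}
```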

Time constraint

We could also consider a time constraint, such as a schedule. However, it was not implemented in the app.

GPS location

Before the algorithm can be launched, the user needs to grant location access. If an accident occurred but the app had no access to GPS, there would be no location to send help to.

The GPS is queried periodically, so the device obtains fresh coordinates from time to time. Otherwise, the device might hold on to outdated coordinates as the last known location.
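A simple way to decide when to ask for new coordinates is to track the age of the last fix. The hypothetical `LocationRefreshPolicy` below is a plain-Java sketch; the post does not state the refresh interval, so the maximum age is a constructor parameter. On Android, the timestamp of a fix is available from `Location.getTime()`.

```java
/** Hypothetical policy: request a new GPS fix when there is none yet or the last one is too old. */
final class LocationRefreshPolicy {
    private final long maxAgeMs;
    private long lastFixMs = Long.MIN_VALUE; // sentinel: no fix received yet

    LocationRefreshPolicy(long maxAgeMs) {
        this.maxAgeMs = maxAgeMs;
    }

    /** Records the time of the latest fix. */
    void onFix(long fixTimeMs) {
        lastFixMs = fixTimeMs;
    }

    /** True if there is no fix yet or the last fix is at least maxAgeMs old. */
    boolean needsRefresh(long nowMs) {
        // The sentinel check short-circuits before the subtraction, so it cannot overflow.
        return lastFixMs == Long.MIN_VALUE || nowMs - lastFixMs >= maxAgeMs;
    }
}
```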

Sensors

Sensor registration is dynamic. When the phone is in a pocket and the app is required to work, the Service registers the sensors required by the detector. The current detectors use only the accelerometer because the gyroscope consumes several times more battery.

As sensor samples roll in, the last 10 seconds are kept in the app's memory. When a new sample is added, the oldest one is dropped.
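The rolling 10-second window is a classic ring buffer. The sketch below assumes a fixed sampling rate, so 10 seconds corresponds to a fixed number of samples (for example 500 at an assumed 50 Hz); `SampleWindow` is a hypothetical name, not the app's class.

```java
/** Fixed-size ring buffer holding the most recent accelerometer samples (x, y, z). */
final class SampleWindow {
    private final float[][] samples;
    private int next = 0; // index where the next sample will be written
    private int size = 0; // number of valid samples stored

    /** E.g. 10 s at an assumed 50 Hz gives a capacity of 500. */
    SampleWindow(int capacity) {
        samples = new float[capacity][3];
    }

    /** Adds a sample, overwriting the oldest one once the buffer is full. */
    void add(float x, float y, float z) {
        samples[next][0] = x;
        samples[next][1] = y;
        samples[next][2] = z;
        next = (next + 1) % samples.length;
        if (size < samples.length) {
            size++;
        }
    }

    int size() {
        return size;
    }

    /** Copies the stored samples, oldest first, independent of later writes. */
    float[][] snapshot() {
        float[][] copy = new float[size][];
        int start = (next - size + samples.length) % samples.length;
        for (int i = 0; i < size; i++) {
            copy[i] = samples[(start + i) % samples.length].clone();
        }
        return copy;
    }
}
```

Preallocating the array avoids creating garbage for every sample, which keeps the per-sample cost constant on a long-running background service.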

Meanwhile, the detector waits for a burst of higher activity. If there is a potential risk of a fall, the saved samples are copied and sent to the detector for a more precise analysis. The samples keep rolling in as before, because another risky event can happen at any moment.
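The two-stage approach (cheap screening, then detailed analysis on a copy) can be sketched as follows. The post does not say how "higher activity" is measured, so this hypothetical `ActivityTrigger` assumes a threshold on the acceleration magnitude, given in multiples of g; the threshold value is a placeholder, not the app's. The copy step mirrors the duplication of samples described above.

```java
/** Hypothetical pre-screening step for potential falls, based on an assumed magnitude threshold. */
final class ActivityTrigger {
    static final float GRAVITY = 9.81f; // m/s²
    private final float thresholdMs2;

    ActivityTrigger(float thresholdInG) {
        this.thresholdMs2 = thresholdInG * GRAVITY;
    }

    /** Euclidean norm of the accelerometer vector in m/s². */
    static float magnitude(float x, float y, float z) {
        return (float) Math.sqrt(x * x + y * y + z * z);
    }

    /** True if the sample exceeds the threshold and the window should be analysed in detail. */
    boolean isRisky(float x, float y, float z) {
        return magnitude(x, y, z) > thresholdMs2;
    }

    /** Deep-copies the window so the detector works on a frozen copy while the live buffer keeps rolling. */
    static float[][] copyForDetector(float[][] window) {
        float[][] copy = new float[window.length][];
        for (int i = 0; i < window.length; i++) {
            copy[i] = window[i].clone();
        }
        return copy;
    }
}
```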

If the detector confirms a fall, the app triggers the alarm as described above. The implementation of the algorithm is simply a translation of the Python algorithm into Java, and the model is converted into an equivalent Java class.

That is it for the Android app! I will write more posts about car collision detection and background processing on Android.